Estimating the value of Pi using Monte Carlo

Learn how to estimate the value of Pi using distributed Monte Carlo simulations powered by Flame.

Read More →
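As a rough illustration of the idea behind that post, the sketch below estimates Pi by sampling random points in the unit square and counting how many land inside the quarter circle. It is a minimal sketch in plain Python and does not use Flame's API: `count_hits` and `estimate_pi` are hypothetical names, and the sequential loop over tasks only stands in for work that a distributed engine could run in parallel.

```python
import random


def count_hits(num_samples: int, seed: int) -> int:
    """Count random points in the unit square that fall inside the quarter circle."""
    rng = random.Random(seed)  # per-task generator, so tasks stay independent
    hits = 0
    for _ in range(num_samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y <= 1.0:
            hits += 1
    return hits


def estimate_pi(num_tasks: int, samples_per_task: int) -> float:
    """Combine the hit counts of independent tasks into one estimate of Pi."""
    # Assumption: each call to count_hits is one independent task. They run
    # sequentially here; a distributed engine could run them in parallel.
    total_hits = sum(
        count_hits(samples_per_task, seed=task_id) for task_id in range(num_tasks)
    )
    # The quarter circle covers Pi/4 of the unit square, so scale by 4.
    return 4.0 * total_hits / (num_tasks * samples_per_task)


if __name__ == "__main__":
    print(estimate_pi(num_tasks=8, samples_per_task=100_000))
```

Because each task only returns a hit count, the tasks share no state and their results can be summed in any order, which is what makes this kind of simulation a natural fit for elastic, parallel execution.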

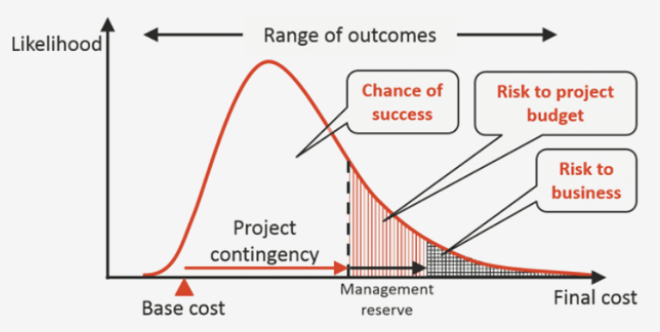

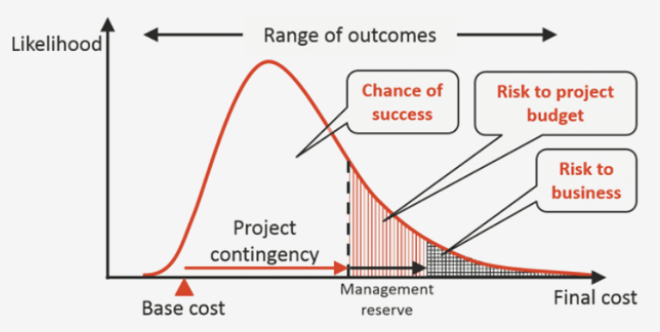

XFLOPS empowers enterprises to build serverless platforms with Flame, delivering a secure, cost-effective, and high-performance runtime environment. Drawing on decades of expertise in elastic workload management, Flame handles demanding elastic workloads, such as AI agents and quant workloads, efficiently and at scale.

Scale your workloads dynamically based on demand with auto-scaling capabilities and resource optimization.

Session-based authentication and authorization secure access to your elastic workloads, such as AI agents and quant workloads, which run in microVMs.

Advanced scheduling algorithms that optimize resource utilization and workload distribution across your infrastructure.

Support for various hardware configurations including GPUs, TPUs, and specialized accelerators for elastic workloads.

Optimized for maximum throughput and performance, ensuring your elastic workloads run at peak efficiency.

Built on a cloud-native architecture, so it can be deployed on any cloud platform or on-premises.

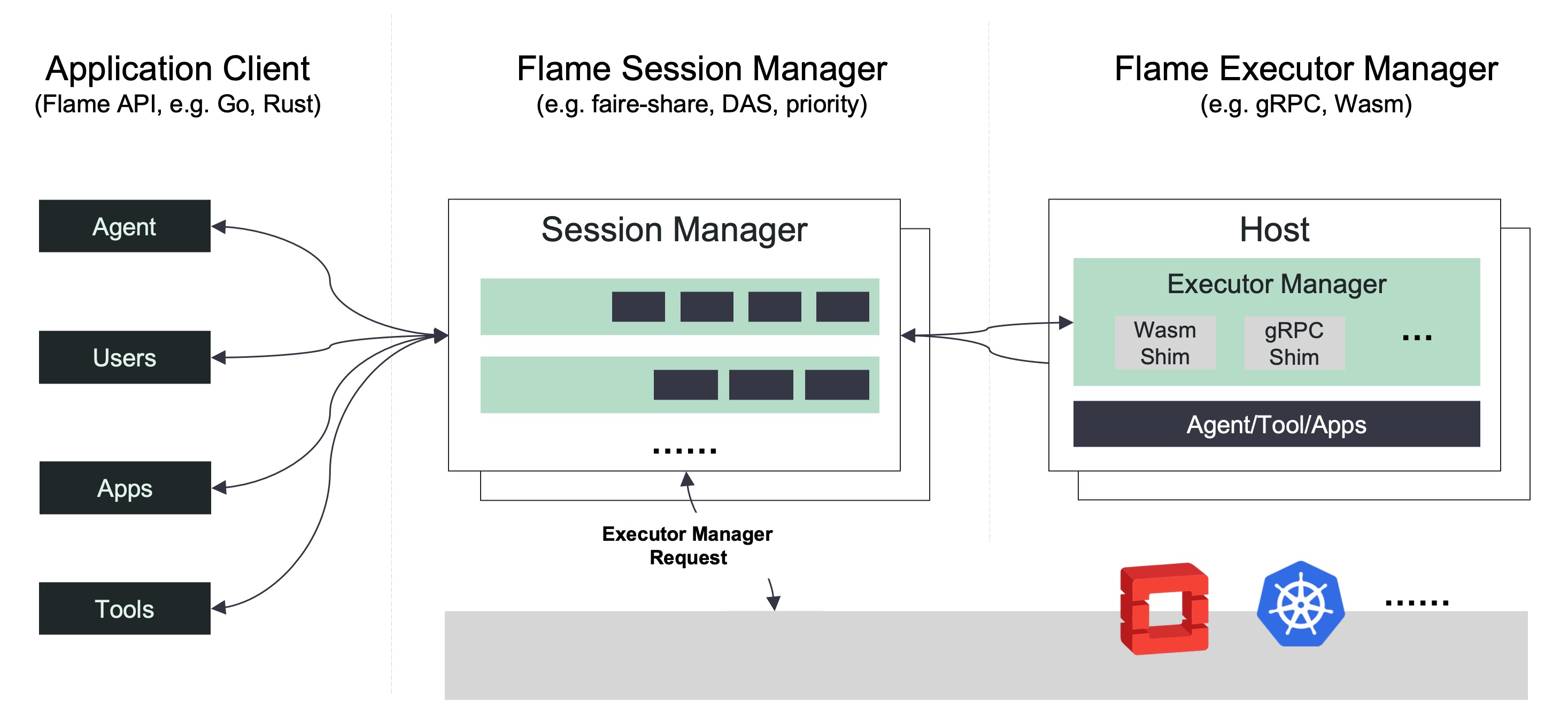

Flame is our flagship distributed engine for elastic workloads, designed to run demanding workloads such as AI agents and quant workloads efficiently and at scale.

Flame is a distributed system designed for elastic workloads. It provides a comprehensive suite of mechanisms commonly required by various classes of elastic workloads, including AI/ML, HPC, and Big Data. Built on more than a decade and a half of experience running diverse high-performance workloads at scale across multiple systems and platforms, Flame incorporates best-of-breed ideas and practices from the open-source community.

Stay updated with the latest insights, tutorials, and announcements from the XFLOPS team.

Learn how to deploy and manage your first AI agents using Flame's intuitive orchestration platform.

Read More →

LLMs are increasingly used to generate code snippets and scripts. This blog post demonstrates how to use Flame to securely execute LLM-generated code.

Read More →